What Zork can teach us about Google, Amazon, and Apple’s Commitment to Data Privacy.

Data Privacy gets a lot of lip service these days. We have Apple declaring that they bake privacy into every product, Google deciding to delete millions of Google+ accounts (there were millions?) because of a coding bug that potentially exposed private data, and even Zuckerberg’s latest op-ed asking for new rules for the entire internet, which we dissected piece by piece already.

But what is these companies’ actual commitment to data privacy and trustworthy practices in general?

The Answer Lies in Zork

When I was a kid, I played a lot of video games. One game in particular, Zork, sparked my love of computers and programming. The original Zork was created by four members of the MIT Dynamic Modeling Group. It is a text-based adventure in which the program handles simple verb-noun combinations and responds depending on what you ask (or type) the character to do. You could command things like “Take”, “Climb”, “Open”, “Close”, “Attack”, and even “Count.” The game would basically return three different types of content:

- a “sorry, I don’t know that word” type of response.

- a hard-coded response

- a random response

By today’s standards, this would barely qualify as a game, but back in the day, this was one of the first and best examples of both random and non-random (hard-coded) responses to questions or commands the world had ever seen. And by the world, I mean my brothers, me, and the three other kids on our block who played this game in our neighborhood in the 1980s.

Zork, which was distributed by Infocom, sold more than 600,000 copies by 1986. Further, to demonstrate that I wasn’t the only data geek into this game, the entirety of Zork was hidden as an “Easter egg” in the 2010 game Call of Duty: Black Ops, and it also featured prominently in Ernest Cline’s best-selling book (and Spielberg film) Ready Player One.

The game definitely got me and my friends programming similar types of basic games using IF-THEN-ELSE statements and RANDOM counter variables in grammar school. But Zork also taught me just how hard it was to create a compelling computer interaction based upon a human dialogue, no matter how simple the verb-noun pairings may have seemed. The early game authors took the time to build a story and world around objects, inventory, content, actions, and the compass rose. Their computer coding was impressive, given this was 1979, but more impressive is how closely the types of responses Zork could provide resemble those of cutting-edge AI assistants from Google, Apple, Microsoft, and Amazon. Let’s look at the three most common response types in Zork.

Three Levels of Effort

Level 1: Error Messaging

Error messages take many forms these days. There are even sites dedicated to best on-brand 404 ‘Page Not Found’ pages. Back in the days of Zork, however, an error message was the first level of effort and, likely, the most common result when a user entered a verb-noun command.

In the commands I entered into the game, poor Zork doesn’t realize I am singing its praises. It responds with “I-don’t-know-the-word [ ______ ]” to essentially any command or word not pre-programmed into the game. The game knows when it doesn’t know something because there is no answer in its code. We see this today every time we ask Google Assistant an overly complex question like “Okay, Google, tell me which subway train is fastest for me to get to my next meeting.” It also happens when companies have specifically chosen not to focus on a particular area of response capabilities, but more on that later.
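To make Level 1 concrete, here is a minimal Python sketch of a parser that falls back to an “I don’t know the word” message. It is not how Zork itself was written; the vocabulary, the function name `parse`, and the “OK.” reply are all assumptions made up for illustration.

```python
# Hypothetical vocabulary; the real game's word list is far larger.
KNOWN_WORDS = {
    "take", "climb", "open", "close", "attack", "count",
    "tree", "troll", "axe",
}


def parse(command: str) -> str:
    """Return an error naming the first unknown word, else a placeholder reply."""
    for word in command.lower().split():
        if word not in KNOWN_WORDS:
            # Level 1: nothing in the code matches, so report the word itself.
            return f"I don't know the word '{word}'."
    # Placeholder: a real game would go on to look up a response here.
    return "OK."


if __name__ == "__main__":
    print(parse("Praise Zork"))
    print(parse("Climb Tree"))
```

The point of the sketch is that the error is cheap: it needs no content at all, only a check that the input is missing from the word list.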

Level 2: Hard-Coded Answers

The second level or response type in Zork is the hard-coded answer. If you ask Zork a verb-noun pairing that is recognized, you will often get an exact response. So “Climb Tree” at the right time resulted in:

This hard-coded answer works the same essentially every time. When you give the command at this exact time, you always get this exact answer. Other commands of this type work no matter when you use them: “> Swing Axe” results in “Whoosh” every single time.
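A hard-coded answer is essentially a table lookup. The sketch below assumes two tables, one keyed by location and command and one that applies everywhere. The location names and the tree description are placeholders, not text from the game; only “Swing Axe” returning “Whoosh” comes from the example above.

```python
from typing import Optional

# Responses that only apply in a specific place (location names are hypothetical).
LOCATION_RESPONSES = {
    ("forest_path", "climb tree"): "<description of the view from the tree>",
}

# Responses that are identical wherever the player is.
GLOBAL_RESPONSES = {
    "swing axe": "Whoosh",
}


def respond(location: str, command: str) -> Optional[str]:
    """Look up a fixed answer; None means no hard-coded response exists."""
    key = command.strip().lower()
    if (location, key) in LOCATION_RESPONSES:
        return LOCATION_RESPONSES[(location, key)]
    return GLOBAL_RESPONSES.get(key)


if __name__ == "__main__":
    print(respond("cellar", "Swing Axe"))
    print(respond("forest_path", "Climb Tree"))
```

The same input in the same place always produces the same output, which is exactly what makes this level easy to spot from the outside.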

Today, you will find a lot of hard-coded answers in our AI assistants and platforms. These are sometimes really funny and they definitely reveal how much effort teams at these companies put into dialogue interaction. For example, if you ask Alexa:

Q: Alexa, do you know Siri?

A: Only by reputation.

Level 3: Randomized Answers

At several points in Zork, you may ask a question or command something that returns random answers. For example, at one point in a dungeon room, there is a troll blocking your way. If you say “Attack Troll” or “Kill Troll” you will get one of several different answers. Usually, the troll kills you after several tries, but every once in a while, you randomly get this:

Randomized answers took considerably more effort to encode in 1979 (when this was being developed), but the result was the same then as it is today: the player quickly learns the suspense of waiting for a response and, in this case, the fun of not knowing the outcome. This gamble mentality makes giving the computer a command more engaging and, dare I say, exciting. In Zork, there isn’t a lot of “fairness” as to how the random responses will play out. It can be very frustrating, but honestly, that was always part of the fun of the game, and you could always command “save game” so you didn’t have to start over.
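A randomized response can be sketched as a weighted draw from a list of outcomes. The outcome strings and weights below are invented for illustration and are not the game’s actual combat text or odds; the function name `attack_troll` is also hypothetical.

```python
import random

# Hypothetical outcomes and weights; the real game's text and odds differ.
TROLL_OUTCOMES = [
    "The troll parries your blow.",
    "The troll wounds you.",
    "The troll falls.",
]
TROLL_WEIGHTS = [6, 3, 1]  # the rare win is weighted lowest


def attack_troll(rng: random.Random) -> str:
    """Pick one outcome at random, favoring the more heavily weighted ones."""
    return rng.choices(TROLL_OUTCOMES, weights=TROLL_WEIGHTS, k=1)[0]


if __name__ == "__main__":
    rng = random.Random()  # pass random.Random(seed) for repeatable runs
    for _ in range(5):
        print(attack_troll(rng))
```

Passing the random generator in as a parameter is a design choice for this sketch: it lets a seeded generator reproduce a sequence when testing, while normal play stays unpredictable.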

There are many randomized experiences today in our AI Assistants. These are equally fun in that you never know what you might get…

Q: Alexa, tell me a story.

Q: Siri, how old are you?

One Year of Asking “Can I Trust You?” to AI

For over one year now, I have asked Siri, Google Assistant, Cortana, and Alexa various questions about privacy, trust, and data. I have spoken about this at conferences and demonstrated it live onstage many times, and the results are fascinating, particularly when viewed in context against Zork’s three levels of effort in responses to dialogue and commands.

The reason I ask these questions is that I am tracking whether or not companies that talk a lot about data privacy and security actually do something about it. Given the massive resources on each of these AI and intelligent assistant teams, the only plausible reason for not having better responses to these questions is that the teams just aren’t focused on them.

I’ve asked these platforms probably a hundred different variations on these themes, but I consistently have come back to “Can I trust you?” as one of the most simple and basic questions that a consumer may ask given all of the data privacy issues and news stories about these “always-listening” devices.

At Level 1, an AI essentially doesn’t know what you are asking it. It can’t parse the request or it doesn’t have a routine or data answer to provide. Zork isn’t an AI, but the response of “I don’t know the word [ ______]” is remarkably similar to Alexa’s “Hmm, I’m not sure I know that one.” and Google Assistant’s “I found these results.” Google typically doesn’t tell you it honestly doesn’t understand a request. Instead, its error message returns some search query, which honestly is so much more frustrating in real life. Nevertheless, it is an error response because it is the catch-all for not having an answer.

When I ask each of the platforms “Can I trust you?,” surprisingly, one company above all returns a level 1 Zork answer:

Q: Hey Siri, Can I trust you?

A: I’m not sure I understand.

I’ve got to be honest. Given Tim Cook’s crusade against the Industrial Data Complex, his effort certainly hasn’t made it to the Siri team. For more than a year, this answer has never changed. Siri can tell you with a simple hard-coded answer how she feels about loaning you money but has no response at all for whether or not you can trust that Apple is taking your data privacy seriously.

At Level 2, we have all three of the other assistants returning hard-coded responses to the question. This means that every time you ask the question, you get the same exact answer, which shows that these companies have, at the least, decided that this is a common enough request or customer experience need to code a response.

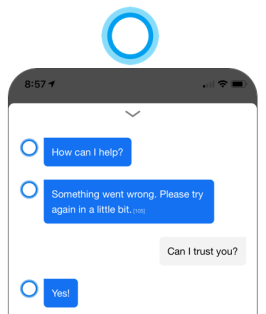

However, the amount of thought that went into each one is literally a window into the depth of their effort. First, Cortana…

Q: Cortana, Can I trust you?

A: Yes!

Really? A one-word response? And what’s with the exclamation point? Cortana, are you trying to convince me or yourself?

Microsoft also had a problem with this question for the last six months, during which it eerily returned something like “I can’t connect at the moment, try again later.” This would happen despite having a strong internet connection. So, on the conspiracy-theory side, I have my doubts that this “yes” answer is their only response to this question.

Next up, we have an odd response from Google Assistant…

Q: Okay Google, Can I trust you?

A: Trust is earned. But you can check out Google’s privacy policies

Wow. Trust is earned? That’s a bit of a fortune cookie response, but okay. It’s certainly better than “Yes!”

Here, though, Google at least attempts to point out that you, as the user, are welcome to check out their privacy policies. Of course, there is no link to the privacy policy here, whereas in every other response Google provides links and options, so that is an area for improvement. But my general dislike of this also stems from the fact that instead of answering with anything definite, Google is literally asking you to go read the thing you didn’t read the first time and are incredibly likely not to go read now.

Lastly, and winning the Level 2 Zork award for hard-coded responses is Amazon’s Alexa…

Q: Alexa, can I trust you?

A: I work hard to give you the best information, respond to your commands and maintain your privacy. If there’s a way I can improve, please add feedback in the Help and Feedback section of the Alexa App.

Amazon has clearly thought about their answer. They talk about responding to commands, getting you the best experience and information, and maintaining your privacy. This is the best answer since Will Ferrell debated in Old School against the Ragin’ Cajun.

In each of these responses, we see a Level 1 or Level 2 effort, as measured on the Zork scale of engagement and effort. Amazon has clearly put a lot more effort into their response, but not programmatically. They essentially just stored a much longer (and better) description of their mission as it relates to users and their trust.
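The “Zork scale” used here can be expressed as a small classifier: ask the same question several times, then sort the replies into a level. This is only a sketch of the informal method described in this article. The function name `zork_level` is hypothetical, and the catch-all list contains just the three phrases quoted above, so it is illustrative rather than complete.

```python
from typing import List

# Catch-all replies quoted in this article, lowercased; illustrative, not complete.
CATCH_ALLS = {
    "i'm not sure i understand.",
    "hmm, i'm not sure i know that one.",
    "i found these results.",
}


def zork_level(responses: List[str]) -> int:
    """Classify repeated answers to one question as Level 1, 2, or 3."""
    if not responses:
        raise ValueError("need at least one response")
    normalized = [r.strip().lower() for r in responses]
    if all(r in CATCH_ALLS for r in normalized):
        return 1  # Level 1: error message or catch-all
    if len(set(normalized)) == 1:
        return 2  # Level 2: the same hard-coded answer every time
    return 3  # Level 3: the answer varies between asks


if __name__ == "__main__":
    print(zork_level(["I'm not sure I understand."] * 3))
    print(zork_level(["Yes!"] * 3))
```

Note that answer length plays no part in the classification: a one-word “Yes!” and a long, thoughtful paragraph both land at Level 2 if they never change.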

Words Without Deeds

The sad reality is that in every instance of asking questions of our current AI Assistants, they fall short. In a simple, Zork-like scenario, Apple provides the worst experience, but honestly, most of these other responses are still robotic, Level 2, hard-coded responses.

We must do better.

If Artificial Intelligence doesn’t help protect data privacy and more easily give us control of our data, it will create far more problems than it solves. In order for this to improve, we need to move beyond words to actions. Actual deeds.

Q: Alexa, delete my voice history.

Q: Ok Google, delete my location history.

Q: Hey Siri, turn off my location services.

Q: Cortana, delete my browsing history.

Not surprisingly, none of these requested commands or actions work. Most return Level 1 error responses or catch-all search results. We can order paper towels, make reservations for a haircut, and learn how to cook anything with a simple request, but when we ask one of these AI tools to help protect our data privacy, there has been no development. No effort whatsoever.

In Zork, you could always type “Restart.” This one-word command would show you your score, delete everything it had stored up until that point, and start anew. Maybe it’s time for Google, Amazon, Microsoft, and Apple to follow the example of Zork and give data privacy much more than just lip service.

P.S. You can try Zork online for free here.